Genetic Algorithms and Neural Networks

I've always been intrigued by artificial intelligence. In 2018, I decided to learn more about it by coding an AI that could learn to drive in a virtual environment. The AIs were built in the Unity 3D game engine using C#.

All of the following projects use genetic algorithms.

I learned how to use them to train neural networks to perform the following tasks:

• "Learn" to shoot arrows

• "Learn" to drive

Genetic Algorithm

I coded my first genetic algorithm in Python. Its goal was to solve the traveling salesman problem:

• Find the shortest route that visits each city exactly once and returns to the starting city.

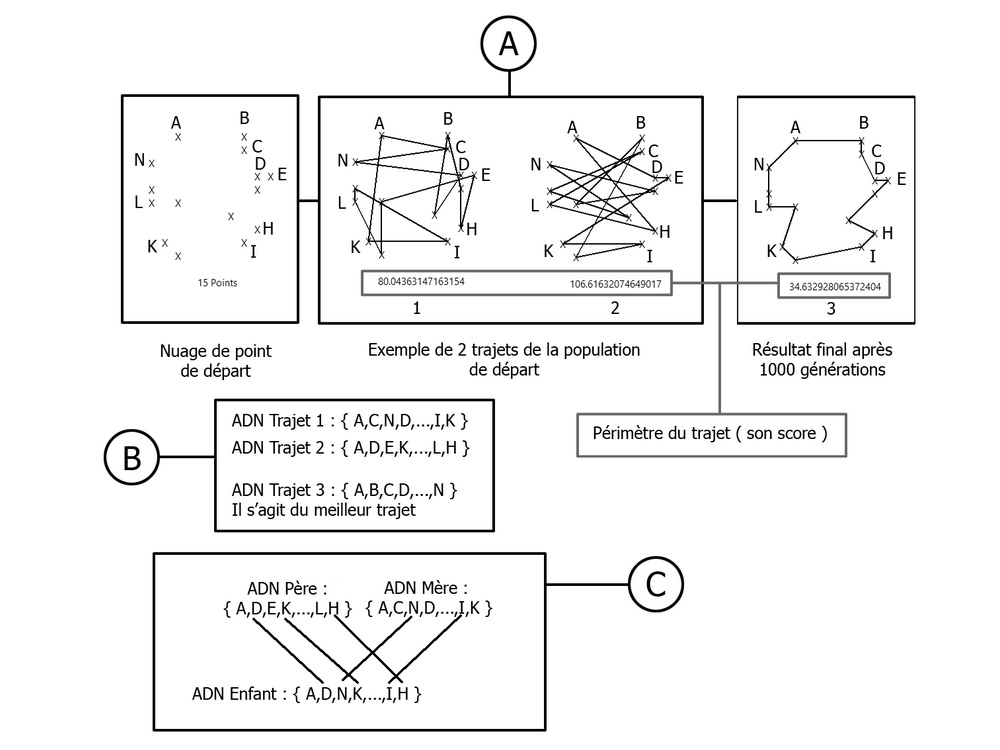

• Block (A) represents the progress of the algorithm at various key moments.

We start by creating a point cloud: 15 points randomly placed on a 10x10 pixel grid.

The genetic algorithm begins by creating an initial population, here 100 routes.

• Block (B) describes these routes. Each route is defined by a list of the points in a randomly determined order. This ordered list is the route's DNA.
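The setup above can be sketched in Python as follows. This is a minimal illustration, not the original project code: it assumes points have integer coordinates on the 10×10 grid and that a route's DNA is a permutation of point indices. The function names `make_points`, `random_route`, and `route_length` are hypothetical.

```python
import math
import random


def make_points(n=15, size=10, rng=random):
    """Place n distinct random points on a size x size grid."""
    cells = rng.sample(range(size * size), n)
    return [(c % size, c // size) for c in cells]


def random_route(n_points, rng=random):
    """A route's DNA: the point indices in a random order."""
    route = list(range(n_points))
    rng.shuffle(route)
    return route


def route_length(route, points):
    """Length of the closed tour, including the return to the starting city."""
    total = 0.0
    for i in range(len(route)):
        a = points[route[i]]
        b = points[route[(i + 1) % len(route)]]   # wraps around to the start
        total += math.dist(a, b)
    return total


rng = random.Random(0)
points = make_points(rng=rng)
population = [random_route(len(points), rng) for _ in range(100)]
```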

Following Charles Darwin's theory of evolution, we now apply natural selection: the routes are ranked by length, and the shorter a route, the higher it ranks.
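Ranking by route length amounts to sorting the population by total tour length. A minimal sketch, with hypothetical function names and routes stored as lists of point indices:

```python
import math


def route_length(route, points):
    """Length of the closed tour, including the return to the starting city."""
    return sum(math.dist(points[route[i]], points[route[(i + 1) % len(route)]])
               for i in range(len(route)))


def rank_population(population, points):
    """Natural selection: sort routes from shortest to longest."""
    return sorted(population, key=lambda route: route_length(route, points))


square = [(0, 0), (0, 1), (1, 1), (1, 0)]
ranked = rank_population([[0, 2, 1, 3], [0, 1, 2, 3]], square)
# [0, 1, 2, 3] follows the perimeter (length 4); [0, 2, 1, 3] crosses the diagonals.
```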

Now that the initial population is ranked, we select the 25 best routes. For more diversity, and again taking inspiration from nature, we also select 25 "lucky" routes at random. These 50 selected routes can now "reproduce."
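The selection step could look like the sketch below. The text does not say whether the 25 lucky routes may include top-ranked ones; this sketch assumes they are drawn from the remaining 75. `select_parents` is a hypothetical name.

```python
import random


def select_parents(ranked, n_best=25, n_lucky=25, rng=random):
    """Return the n_best top-ranked routes plus n_lucky "lucky" routes.

    Assumption: lucky routes are drawn at random, without repetition,
    from the routes that were not already selected among the best.
    """
    best = ranked[:n_best]
    lucky = rng.sample(ranked[n_best:], n_lucky)
    return best + lucky


# Stand-in population: integers 0..99 ranked from best (0) to worst (99).
parents = select_parents(list(range(100)), rng=random.Random(1))
```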

• Block (C) represents the reproduction process: two "selected" routes (a father and a mother) are chosen and their DNA is mixed to produce 4 new routes (4 children).
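The text does not say how the parents' DNA is mixed. Because a route's DNA is an ordering of cities, a plain cut-and-splice would duplicate or drop cities, so permutation-preserving operators are normally used. The sketch below substitutes a simplified ordered crossover (not necessarily what the project did): it keeps a random slice of the father's route in place and fills the other positions with the mother's remaining cities, in her order. Each call draws new cut points, so the 4 children of one couple usually differ. Function names are hypothetical.

```python
import random


def ordered_crossover(father, mother, rng=random):
    """Simplified ordered crossover for routes (permutations of city indices).

    A random slice of the father's route is kept in place; the remaining
    positions receive the mother's other cities, in the order she visits them.
    """
    i, j = sorted(rng.sample(range(len(father) + 1), 2))
    middle = father[i:j]                     # slice inherited from the father
    taken = set(middle)
    rest = [city for city in mother if city not in taken]
    return rest[:i] + middle + rest[i:]


def reproduce(father, mother, n_children=4, rng=random):
    """One couple produces n_children routes, each from a fresh crossover."""
    return [ordered_crossover(father, mother, rng) for _ in range(n_children)]


father = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
mother = [9, 8, 7, 6, 5, 4, 3, 2, 1, 0]
children = reproduce(father, mother, rng=random.Random(3))
```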

The goal is to keep the population size constant (here, 100): the 50 selected routes form 25 couples, and 25 couples × 4 children = 100 new routes. Finally, a slight mutation is applied to the children's DNA.
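A "slight mutation" on a route is often a swap mutation, which exchanges two cities and therefore keeps the DNA a valid route. The sketch below assumes this operator and a hypothetical default rate of 1% per position; the original mutation rate is not given.

```python
import random


def mutate(route, rate=0.01, rng=random):
    """Swap mutation: each position has a `rate` chance of swapping with a
    random position. Returns a new route; the parent list is not modified."""
    child = list(route)
    for i in range(len(child)):
        if rng.random() < rate:
            j = rng.randrange(len(child))
            child[i], child[j] = child[j], child[i]
    return child


child = [3, 0, 4, 1, 2]
mutated = mutate(child, rate=0.5, rng=random.Random(7))
```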

This process of selection/reproduction/mutation is repeated until there is no further improvement.
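Putting the steps together, one possible full loop is sketched below. Several details are assumptions not stated in the text: parents are paired at random into 25 couples, crossover is a simplified ordered crossover, mutation swaps cities with a 1% chance per position, and "no further improvement" means the best length has not decreased for 20 consecutive generations (`patience`).

```python
import math
import random


def route_length(route, points):
    """Length of the closed tour, including the return to the starting city."""
    return sum(math.dist(points[route[i]], points[route[(i + 1) % len(route)]])
               for i in range(len(route)))


def crossover(father, mother, rng):
    """Keep a random slice of the father; fill the rest in the mother's order."""
    i, j = sorted(rng.sample(range(len(father) + 1), 2))
    middle = father[i:j]
    taken = set(middle)
    rest = [city for city in mother if city not in taken]
    return rest[:i] + middle + rest[i:]


def mutate(route, rate, rng):
    """Swap mutation: each position may swap with a random other position."""
    child = list(route)
    for i in range(len(child)):
        if rng.random() < rate:
            j = rng.randrange(len(child))
            child[i], child[j] = child[j], child[i]
    return child


def evolve(points, pop_size=100, n_best=25, n_lucky=25, n_children=4,
           rate=0.01, patience=20, seed=None):
    """Run selection/reproduction/mutation until the best length stops
    improving for `patience` generations. Returns (best_route, best_length)."""
    rng = random.Random(seed)
    n = len(points)
    population = [rng.sample(range(n), n) for _ in range(pop_size)]
    best_route, best_len, stale = None, math.inf, 0
    while stale < patience:
        ranked = sorted(population, key=lambda r: route_length(r, points))
        top_len = route_length(ranked[0], points)
        if top_len < best_len - 1e-9:
            best_route, best_len, stale = ranked[0], top_len, 0
        else:
            stale += 1
        # Selection: the best routes plus some lucky ones from the rest.
        parents = ranked[:n_best] + rng.sample(ranked[n_best:], n_lucky)
        rng.shuffle(parents)
        # Reproduction: consecutive parents form a couple with n_children children.
        population = [mutate(crossover(parents[k], parents[k + 1], rng), rate, rng)
                      for k in range(0, len(parents) - 1, 2)
                      for _ in range(n_children)]
    return best_route, best_len


cells = random.Random(0).sample(range(100), 15)   # 15 distinct cells of a 10x10 grid
points = [(c % 10, c // 10) for c in cells]
route, length = evolve(points, seed=1)
```

This sketch records the best route ever seen but does not copy it into the next generation; carrying the top routes over unchanged (elitism) is a common variant.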

Learn to Shoot Arrows

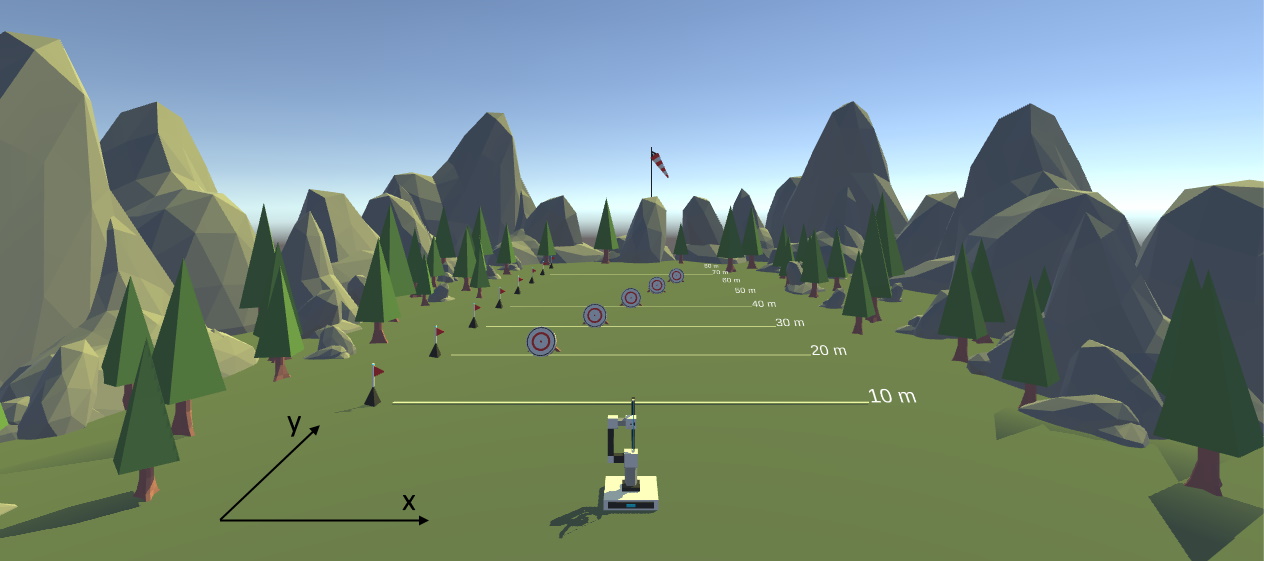

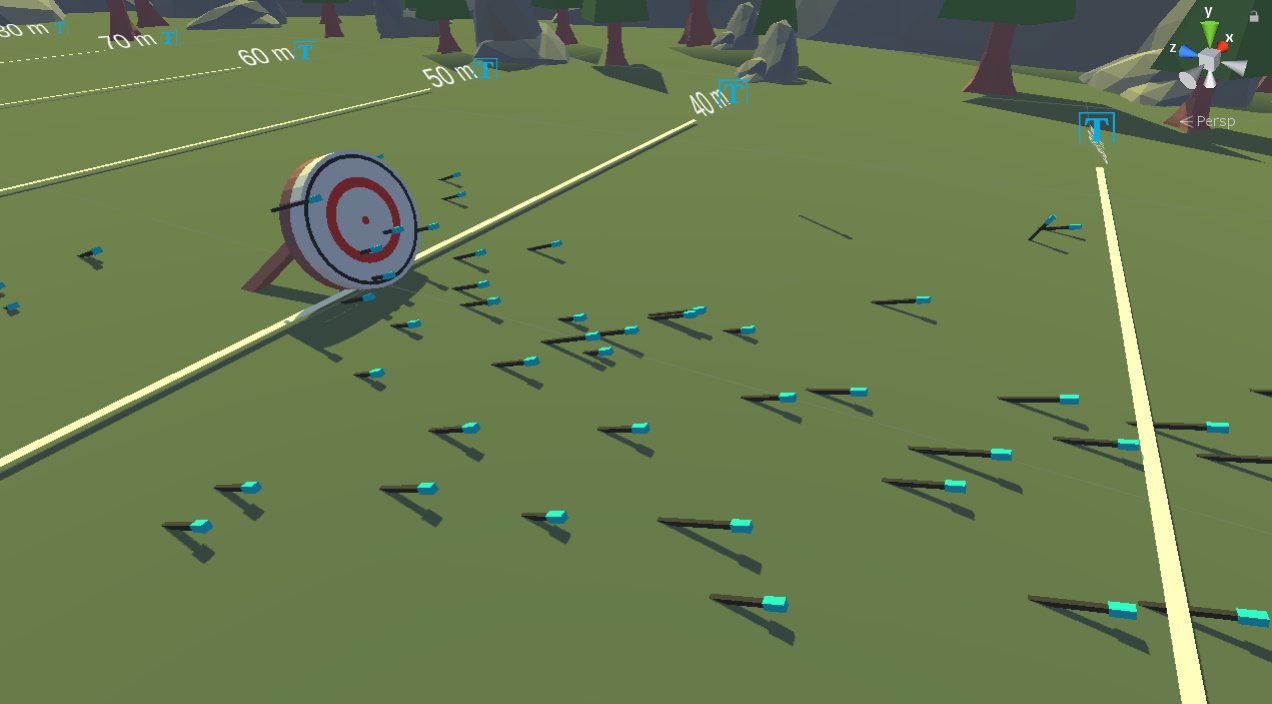

Screenshots from the project in Unity 3D:

The goal was to teach an AI to shoot arrows. I wanted it to hit its target every time, at any distance from 10 m to 80 m, regardless of the wind's strength and direction.

Environment

Modeling and control of the archer robot

Modeling the environment in Unity

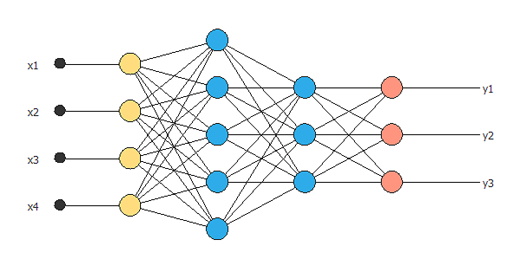

The robot is equipped with a neural network similar to the one shown above.

Description of inputs:

• x1: position of the target along the x-axis

• x2: position of the target along the y-axis

• x3: wind strength along the x-axis

• x4: wind strength along the y-axis

Description of outputs:

• y1: Vertical rotation of the bow

• y2: Horizontal rotation of the bow

• y3: Power of the shot
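A forward pass through such a network can be sketched in a few lines of Python (the project itself was written in C# in Unity). The hidden layer size (5 neurons), the tanh activation, the weight initialization in [-1, 1], and the input scaling are all assumptions. Converting the three outputs, which lie between -1 and 1, into bow angles and shot power would be a separate, project-specific step.

```python
import math
import random


def init_network(layer_sizes, rng=random):
    """Random network for layer sizes such as [4, 5, 3].

    network[layer][neuron] = [bias, w1, w2, ...], one weight per input.
    """
    return [[[rng.uniform(-1.0, 1.0) for _ in range(n_in + 1)] for _ in range(n_out)]
            for n_in, n_out in zip(layer_sizes, layer_sizes[1:])]


def forward(network, inputs):
    """Feed the inputs through every layer, applying tanh to each neuron."""
    values = list(inputs)
    for layer in network:
        values = [math.tanh(neuron[0] + sum(w * v for w, v in zip(neuron[1:], values)))
                  for neuron in layer]
    return values


net = init_network([4, 5, 3], random.Random(42))
# x1, x2: target position; x3, x4: wind strength (assumed scaling to roughly [-1, 1]).
x = [30 / 80, 5 / 80, -2 / 10, 1.5 / 10]
y1, y2, y3 = forward(net, x)   # vertical rotation, horizontal rotation, power
```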

This neural network is trained following the same principle as the one presented in the Genetic Algorithm section.
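To apply the genetic algorithm to a network, its DNA can be taken to be the flat list of its weights and biases. The sketch below assumes that representation, with uniform crossover (each gene copied from a randomly chosen parent) and Gaussian mutation; these are common operators for real-valued DNA, not confirmed details of the project. It uses a nested `[layer][neuron][bias, weights...]` layout, and all function names are hypothetical.

```python
import random


def flatten(network):
    """DNA of a network: all its biases and weights in one flat list."""
    return [w for layer in network for neuron in layer for w in neuron]


def unflatten(dna, layer_sizes):
    """Rebuild the nested [layer][neuron][bias, weights...] structure."""
    network, k = [], 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        layer = []
        for _ in range(n_out):
            layer.append(dna[k:k + n_in + 1])   # bias + one weight per input
            k += n_in + 1
        network.append(layer)
    return network


def crossover(father, mother, rng=random):
    """Uniform crossover: each gene is copied from one parent at random."""
    return [f if rng.random() < 0.5 else m for f, m in zip(father, mother)]


def mutate(dna, rate=0.05, scale=0.2, rng=random):
    """Gaussian mutation: a few genes receive a small random perturbation."""
    return [g + rng.gauss(0.0, scale) if rng.random() < rate else g for g in dna]


father = [0.5, -0.2, 0.8, 0.1]
mother = [-0.4, 0.3, 0.0, 0.9]
child = mutate(crossover(father, mother, random.Random(1)), rng=random.Random(2))
```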

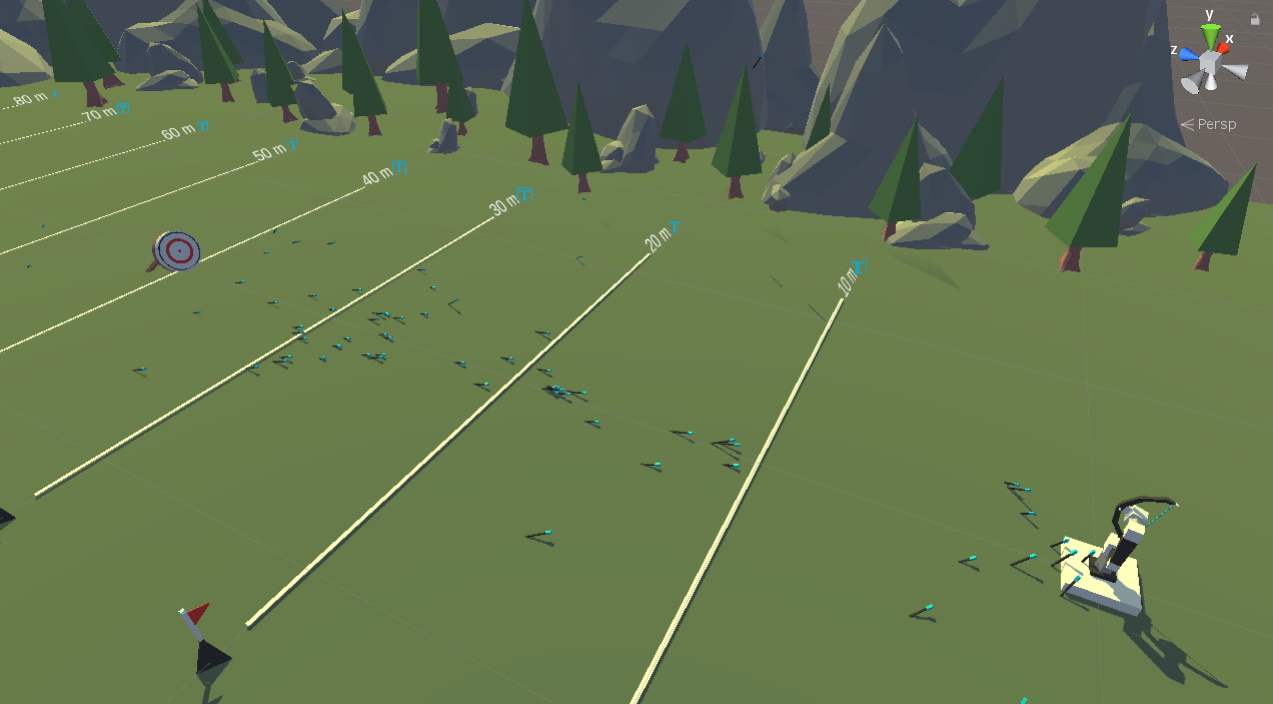

Results

• First generation: shots are completely random.

• After 5 generations: the shots begin to cluster.

• After 25 generations: some neural networks achieve satisfactory results and hit the target.

The experiment was then repeated with randomly positioned targets, and the results are not yet conclusive. The computing power available to me is not sufficient to make efficient progress on this project, and a genetic algorithm may not be the best approach for this problem.

Learn to Drive

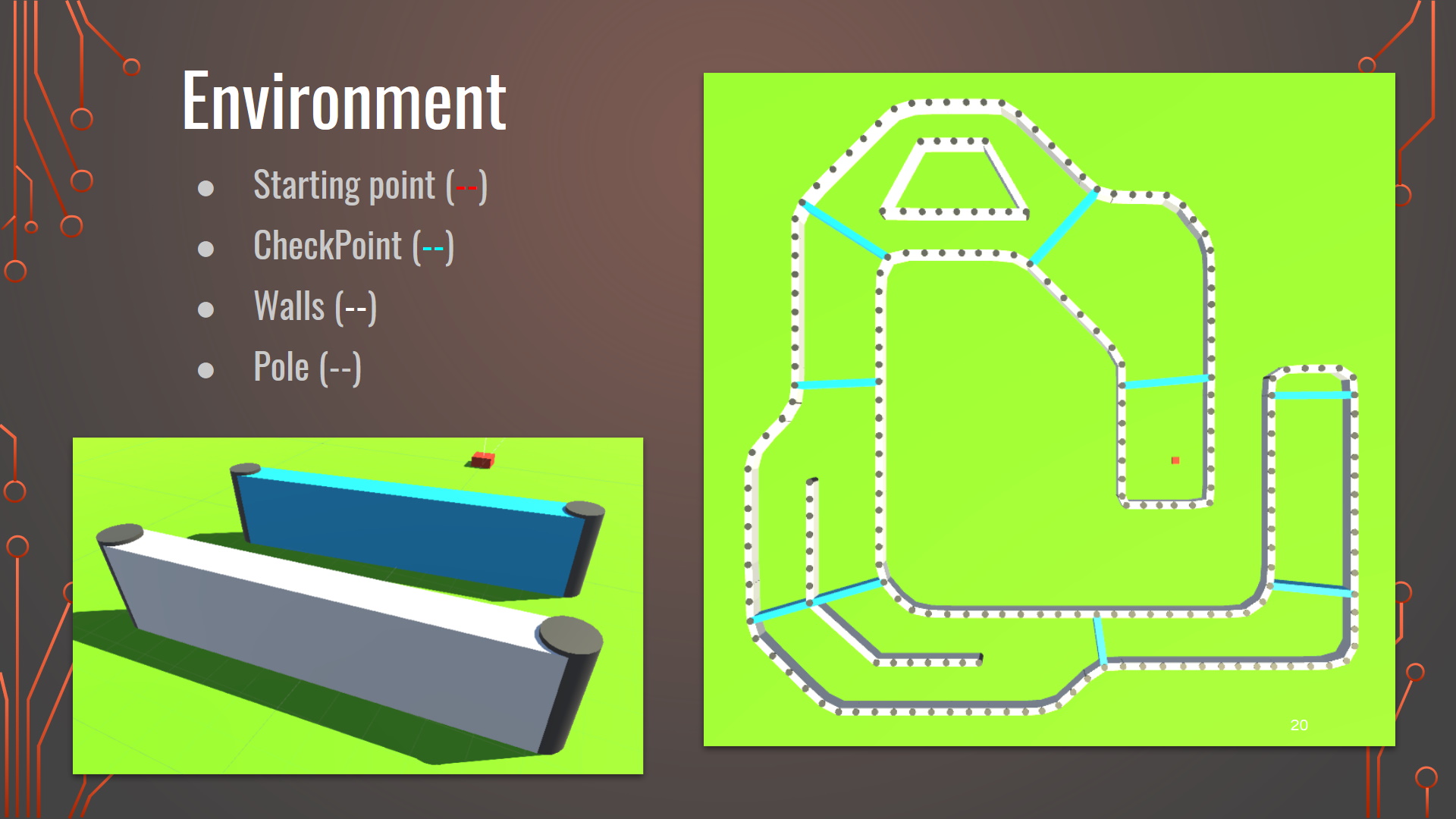

Excerpt from a project presentation:

The goal was to teach an AI to complete a circuit as quickly as possible without colliding with the environment.

I wanted to see if it was possible to use AI to find the optimal path.

Environment

First, I coded a scene in Unity in which you can draw any circuit you want.

The circuit must include at least:

• a starting point

• walls that form a closed circuit

• checkpoints (the last checkpoint is the finish line)

Neural Network

Next, I coded a second scene in which you can create your vehicle, but most importantly, customize the associated neural network (NN).

You can choose:

• the number of inputs (1 - 6). These are the vehicle's distance sensors.

• the number of hidden layers (0 - 3). These are layers of neurons between the input and output of the NN.

• the number of neurons per hidden layer (1 - 5)
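The options of this editor scene map directly onto the layer sizes of a fully connected network. A minimal sketch of that mapping follows. The class name, the default values, and the two outputs (for example steering and acceleration) are assumptions, since the text does not list the car's outputs. `n_parameters` assumes one bias per neuron and counts the length of the DNA the genetic algorithm has to evolve.

```python
from dataclasses import dataclass


@dataclass
class CarBrainConfig:
    """Hypothetical config mirroring the options of the vehicle editor scene."""
    n_inputs: int = 5           # distance sensors (1-6)
    n_hidden_layers: int = 1    # hidden layers (0-3)
    neurons_per_layer: int = 4  # neurons per hidden layer (1-5)
    n_outputs: int = 2          # assumption: e.g. steering and acceleration

    def __post_init__(self):
        # Enforce the ranges offered by the editor scene.
        for name, value, lo, hi in [
            ("n_inputs", self.n_inputs, 1, 6),
            ("n_hidden_layers", self.n_hidden_layers, 0, 3),
            ("neurons_per_layer", self.neurons_per_layer, 1, 5),
        ]:
            if not lo <= value <= hi:
                raise ValueError(f"{name} must be between {lo} and {hi}, got {value}")

    def layer_sizes(self):
        """E.g. 5 inputs, 2 hidden layers of 3 neurons and 2 outputs give [5, 3, 3, 2]."""
        return ([self.n_inputs] + [self.neurons_per_layer] * self.n_hidden_layers
                + [self.n_outputs])

    def n_parameters(self):
        """Weights plus one bias per neuron: the length of the car's DNA."""
        sizes = self.layer_sizes()
        return sum((n_in + 1) * n_out for n_in, n_out in zip(sizes, sizes[1:]))


brain = CarBrainConfig(n_inputs=5, n_hidden_layers=2, neurons_per_layer=3)
```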

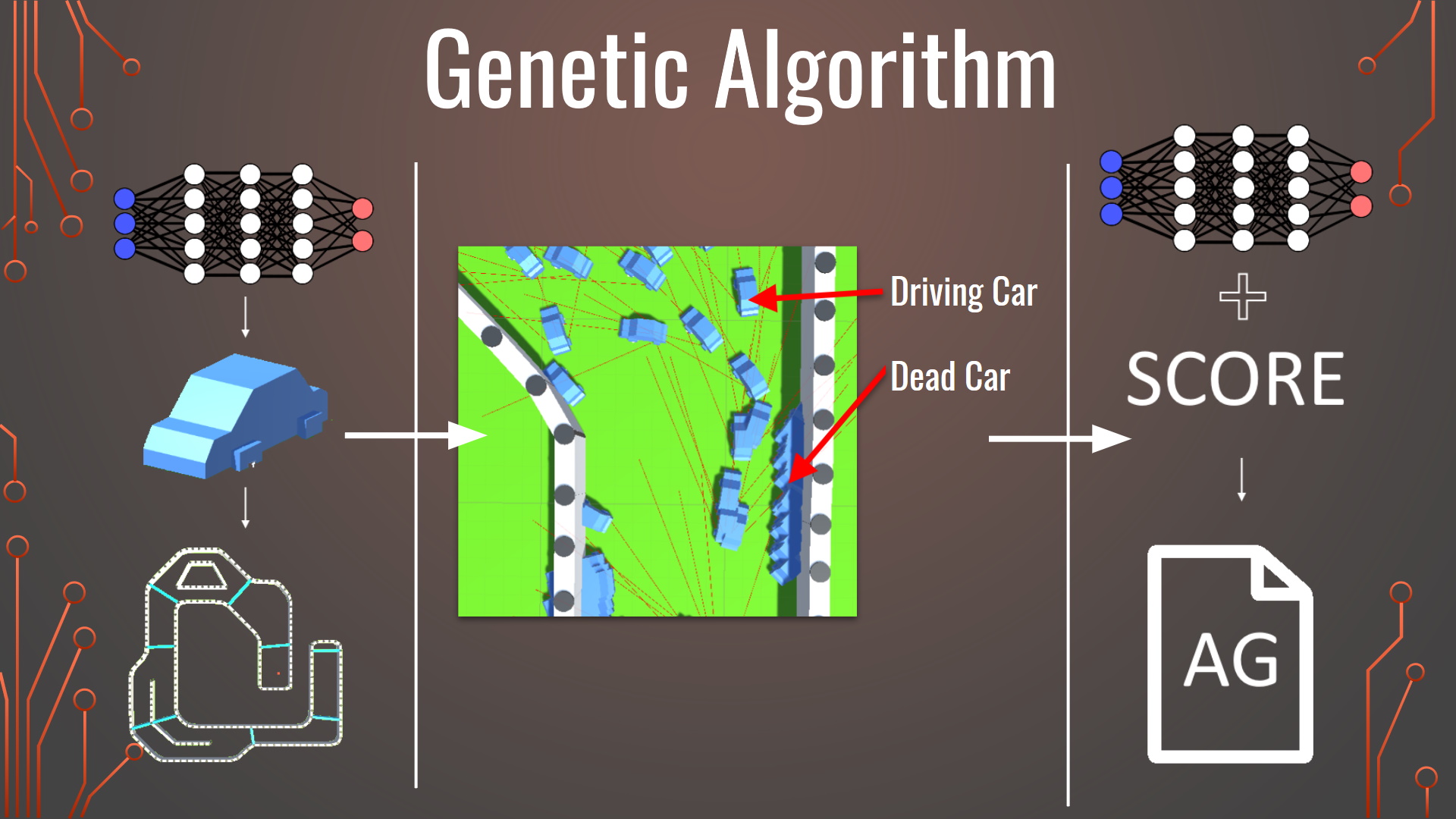

Genetic Algorithm

It is now time to code the "learning" part using a genetic algorithm:

• Our initial population consists of 100 cars, each equipped with its own neural network.

They are all sent onto the circuit where they try to go as far as possible.

Once they have all either completed the circuit or collided with a wall, they are ranked by their score, which is calculated from their time and the distance they traveled.
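The text does not give the score formula. One simple ranking rule that uses both quantities, sketched below as an assumption, puts the cars that finished the circuit first, ordered by time, and the remaining cars after them, ordered by distance traveled. `CarRun` and `rank_key` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class CarRun:
    """Outcome of one car's attempt (hypothetical record)."""
    distance: float   # distance traveled before finishing or crashing
    time: float       # seconds elapsed
    finished: bool    # True if the car crossed the finish line


def rank_key(run):
    """Sort key: finishers first (fastest first), then the others (farthest first)."""
    if run.finished:
        return (0, run.time)
    return (1, -run.distance)


runs = [CarRun(50.0, 10.0, False), CarRun(200.0, 40.0, True),
        CarRun(200.0, 35.0, True), CarRun(120.0, 30.0, False)]
ranked = sorted(runs, key=rank_key)
```

A lexicographic key like this avoids choosing weights to trade time against distance; a single weighted score is the usual alternative when a numeric fitness is needed.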

• We can now proceed with selection, reproduction, and mutation following the same principle as in the Genetic Algorithm section.

(Note: in the center of the slide, the red lines represent the cars' distance sensors.)
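Each of these sensor lines can be modeled as a ray cast from the car, with the circuit's walls treated as 2D line segments seen from above. In Unity this is typically done with `Physics.Raycast` against the wall colliders; the sketch below shows the underlying ray/segment geometry in plain Python. The function name `sensor_distance` and the 10-unit maximum range are assumptions.

```python
import math


def cross(ax, ay, bx, by):
    """2D cross product (z component) of vectors a and b."""
    return ax * by - ay * bx


def sensor_distance(pos, angle, walls, max_range=10.0):
    """Distance from pos along direction `angle` (radians) to the nearest wall
    segment, or max_range if no wall is hit within range."""
    px, py = pos
    dx, dy = math.cos(angle), math.sin(angle)
    best = max_range
    for (ax, ay), (bx, by) in walls:
        ex, ey = bx - ax, by - ay
        denom = cross(dx, dy, ex, ey)
        if abs(denom) < 1e-12:                # ray parallel to the wall
            continue
        wx, wy = ax - px, ay - py
        t = cross(wx, wy, ex, ey) / denom     # distance along the ray
        u = cross(wx, wy, dx, dy) / denom     # position along the wall (0..1)
        if t >= 0 and 0 <= u <= 1:
            best = min(best, t)
    return best


# A 4 x 4 square "circuit" and a car at (1, 1) looking along +x.
walls = [((0, 0), (4, 0)), ((4, 0), (4, 4)), ((4, 4), (0, 4)), ((0, 4), (0, 0))]
front = sensor_distance((1, 1), 0.0, walls)
```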

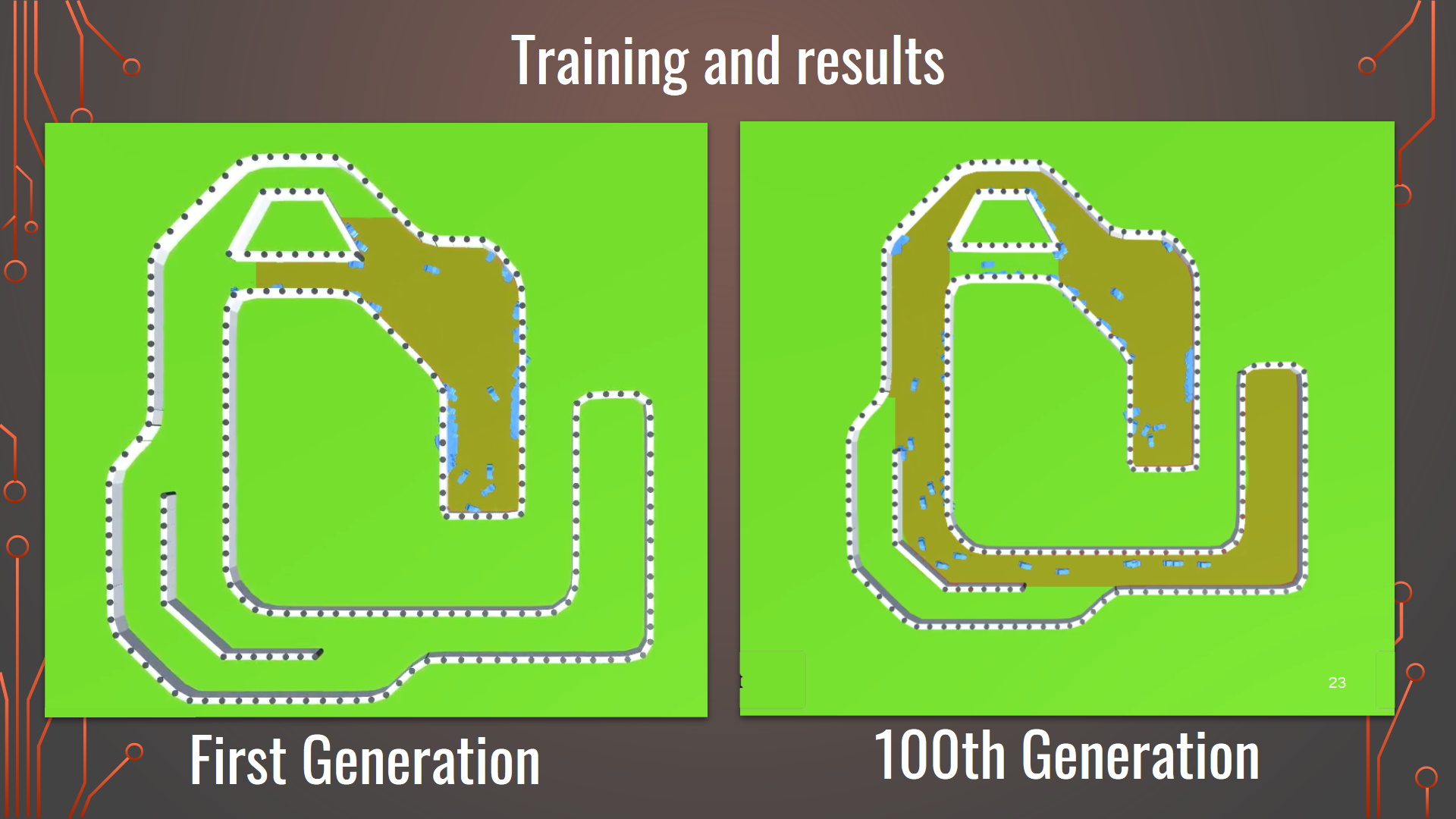

Results

Above, we can observe the evolution of the AI after 100 generations.

Many cars reach the end of the circuit, and some do so much faster than others.

Observations: the parameters (weights) of the best car's NN can be saved.
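Saving a trained network only requires its architecture and its list of weights. A minimal Python sketch using JSON is shown below; the file name, the JSON layout, and the function names are hypothetical, and the Unity project may have stored these values differently.

```python
import json
import os
import tempfile


def n_weights(layer_sizes):
    """Weights plus one bias per neuron for a fully connected network."""
    return sum((n_in + 1) * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


def save_network(path, layer_sizes, weights):
    """Write the architecture and the flat weight list of the best car to JSON."""
    if len(weights) != n_weights(layer_sizes):
        raise ValueError("weight count does not match the architecture")
    with open(path, "w") as f:
        json.dump({"layer_sizes": layer_sizes, "weights": weights}, f)


def load_network(path):
    """Read back (layer_sizes, weights) so the car can be placed on a new circuit."""
    with open(path) as f:
        data = json.load(f)
    return data["layer_sizes"], data["weights"]


path = os.path.join(tempfile.gettempdir(), "best_car.json")   # hypothetical file
save_network(path, [2, 1], [0.1, -0.3, 0.7])
sizes, weights = load_network(path)
```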

When this car is placed on a circuit it has never seen before, it completes it easily.

In a way, the car has "learned" to drive.